Apertus.Home

Websites

The official Apertus Website is now located at: www.apertus.org

This Wiki entry is being restructured for future use.

Work In Progress

FPGA

In the dvinfo thread, a contributor (Z.H.) describes modifications he has been making to the current FPGA design.

25 May 2007: Z.H. has put this project on standby and is offering it to anyone willing to take it over.

"If somebody's interested, here's the BloodSimple(TM) codec I wrote and experimented with. It creates a bitstream directly from the bayer input, always taking the difference of pixels as input. It works best if we use the algorithm in interframe mode, which means that we calculate the difference of pixels from the same coordinate of the previous frame. This of course needs that whole frame in memory.

An output sample always begins with a one bit flag (F) which tells the decoder if the following sample has the same bit length (F=0) as the previous one (a length of P) or it is greater with G bits (F=1). This G constant can be set according to the input stream and it will tell the contrast ratio of the generated images: with a T bit input, following pixels usually don't have more difference than T/2 bits. Visually lossless is around T/3 but it can be calculated explicitly in a pre-encoding step if needed. (By having the motion blur of the 1/24sec exposure, the blur caused by fast pans won't cause quality loss in the encoder as it would do with shorter exposure times.) If F=0, the decoder uses the previous P bit length but if the sample's most significant L bits are zero then the next sample will be taken with P-L bits (if that sample's F=0). The encoder knows this and if the next sample's length is greater than P-L then it sets F=1. L is usually set to T/4.

With sample videos (no real bayer input, only some grayscale mjpeg stuff with some artificial noise added) it has produced a 2:1 average ratio which means 2.5:1 with low freq content and 1.5:1 with high freq content (i.e. trees with leaves with the sky as background). Because the code is so simple one can use several module instances in an fpga, for example, one for each pixel in a 20x20 block (or whatever the Elphel uses), working in parallel with only the memory bandwidth setting the performance limits. However, with a smaller no. of instances it may fit beside the theora module; although two output streams aren't supported yet. And it's really easy to implement it in verilog which can be a concern if somebody's not an expert"
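As a rough illustration of the bit-length scheme described in the quote above, here is a minimal Python sketch. It is not Z.H.'s code, and several details are assumptions because the post leaves them open: the function names (`encode`, `decode`, `next_width`), the default values chosen for T, G and L, starting with P = T, capping the grown width at T, saturating a value that still does not fit in P+G bits, and applying the shrink rule after every sample regardless of its flag. The sketch works on non-negative integers only; how signed pixel differences are mapped to such values is not specified in the post and is left out here.

```python
def next_width(value, width, L):
    """Width for the next sample: shrink by L if the top L bits were zero."""
    if width > L and value < (1 << (width - L)):
        return width - L
    return width


def encode(samples, T=12, G=6, L=3):
    """Encode non-negative T-bit samples into a list of 0/1 bits."""
    bits = []
    P = T  # assumption: the first sample is sent at full width
    for v in samples:
        if not 0 <= v < (1 << T):
            raise ValueError("sample out of range for T bits")
        if v.bit_length() <= P:
            flag, width = 0, P                # F=0: same length as before
        else:
            flag, width = 1, min(P + G, T)    # F=1: grow by G bits (capped at T)
            v = min(v, (1 << width) - 1)      # assumption: saturate if still too wide
        bits.append(flag)
        bits.extend((v >> i) & 1 for i in reversed(range(width)))
        P = next_width(v, width, L)
    return bits


def decode(bits, count, T=12, G=6, L=3):
    """Decode `count` samples produced by encode() with the same T, G, L."""
    out = []
    pos = 0
    P = T
    for _ in range(count):
        flag = bits[pos]
        pos += 1
        width = min(P + G, T) if flag else P
        v = 0
        for b in bits[pos:pos + width]:
            v = (v << 1) | b
        pos += width
        out.append(v)
        P = next_width(v, width, L)  # mirrors the encoder's state update
    return out


# Usage: small values let the width shrink, one larger value forces F=1.
samples = [5, 3, 0, 1, 2, 40, 41, 3, 0, 0]
bits = encode(samples)
assert decode(bits, len(samples)) == samples
print(len(bits), "bits instead of", len(samples) * 12)
```

Because the decoder repeats the same width update as the encoder, no length field is ever transmitted; only the one-bit flag is. With the saturation assumption the sketch becomes lossy whenever a value needs more than P+G bits, which seems to match the post's remark that G governs the contrast ratio of the output.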

"I'm afraid there are no verilog programmers here but somebody might pick it up. As for other bayer cameras, I'm not aware of any other which could be freely programmed like the Elphel. And the compression rate is pretty low (just enough for the Elphel, hopefully), perhaps it might be combined with some other algorithm to get better results."

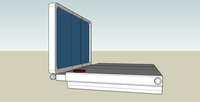

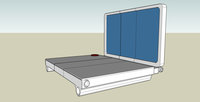

This is a previous prototype by Oscar Spierenburg with the Tablet PC placed on top of the camera.

Shell-Design

Final part list:

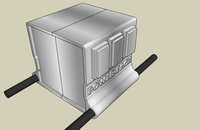

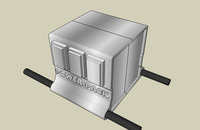

Sensor-Shell

Controller-Shell with buttons

Currently the PC serves as the controller.

Recording-Shell

Battery-Shell

Screen-Shell (TFT Monitor)

Audio-Shell

The ideal audio shell would be as follows: provide two phantom-powered (48 V) XLR inputs, each with a switch to enable or disable phantom power (required for professional mics) and a switch to select mic or line level. Some people have had success using BeachTek/juicedLink-type adapters, which convert XLR input to mini-jack output. So in its first incarnation, this could be a simple "line in" without volume control.

A good-quality preamp can be built from a Velleman kit, cf. http://rebelsguide.com/forum/viewtopic.php?t=1803&sid=c9da5241552feeee72941b44b6ff6e60 The kit itself is available here: http://www.vellemanusa.com/us/enu/product/view/?id=350491 Note that it does not include phantom power. // I think phantom power is a must.

The ideal system would be an open-source audio board.

Intelligent-Handle (USB connected) with ball-and-socket joint.

This could serve as the joint for the handle; it supports 6 kg and costs $55.

(Do you know of anything else?)

The parts should be produced in aluminium and/or ABS plastic. All designs need to be open source. It would be good to find a university or shop owner who would participate in this project without personal benefit.